We often encounter tuning or performance problems where the underlying data access logic is buried or not available to us. The application may, for example, use an Object Broker or Query Server whose SQL statements are unknown in advance because they are generated at runtime and may change with each execution. The application’s query generator may also be unable to deal correctly with specific constructs (for example, a restriction in the WHERE clause that cannot be resolved through the use of an index) that appear in many SQL statements. This is not a complete nightmare scenario: there are still steps you can take to improve performance. In this case, you may have to resort to techniques that solve the problem at the session or system level rather than at the SQL statement level.
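As a minimal sketch of a session-level technique, a logon trigger can adjust the execution environment for the application’s sessions without touching its SQL. The trigger name, the `APP_USER` account, and the parameter value below are hypothetical; creating the trigger also assumes an account with the privilege to create database-level triggers.

```sql
-- Hypothetical example: apply a session-level optimizer setting
-- to every session opened by the application account APP_USER.
CREATE OR REPLACE TRIGGER app_session_settings
AFTER LOGON ON DATABASE
BEGIN
  IF SYS_CONTEXT('USERENV', 'SESSION_USER') = 'APP_USER' THEN
    -- The value 50 is illustrative only, not a recommendation.
    EXECUTE IMMEDIATE 'ALTER SESSION SET optimizer_index_cost_adj = 50';
  END IF;
END;
/
```

Scoping the change to one account keeps other users of the database on the instance defaults, which limits the blast radius if the setting turns out to be wrong.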

When you don’t have complete control of the SQL statements the application creates, because the code is not available or is generated dynamically at runtime, your tuning choices will probably be restricted to optimizing the data access structures (system) or modifying the execution environment (session). Recall that the following techniques can only be used to tune or optimize specific SQL statements. They may not be applicable at the session or system level.

– Alter SQL Statements

– Use of hints

– SQL Profiles

– Stored Outlines

– SQL Plan Baselines

So what do we do?

The same tools Oracle provides that save hundreds of hours of labor-intensive manual SQL tuning can also be used in this scenario to help at the system and session level, where the code (SQL) is not available. Using these tools, you can apply and measure global changes to the database first and potentially tune hundreds of queries at once. If you skip global tuning and start with individual statements (for example, by using SQL with optimizer directives), you will almost certainly find that subsequent global changes (e.g., optimizer parameter changes) in effect “un-tune” those carefully crafted SQL statements. Start with system-level tuning and establish a baseline before beginning to tune poorly performing individual SQL statements generated on behalf of the application. This means you should carefully apply the following methods in the following order.

– Tune Operating System, Disk I/O, and Network environment

– Gather and baseline ORACLE optimizer statistics

– Adjust cost based optimizer parameters and tune to meet site specific needs

– Optimize the Application database instance

– Perform Query Optimization and Tune the SQL Access workload
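The statistics step in the list above could be sketched as follows, using the `DBMS_STATS` package to save the current statistics before refreshing them. The schema name `APP_OWNER`, the statistics table `STATS_BASELINE`, and the label `BEFORE_TUNING` are hypothetical.

```sql
-- Hypothetical names: schema APP_OWNER, statistics table STATS_BASELINE,
-- and label BEFORE_TUNING.
BEGIN
  -- Create a table to hold saved statistics (one-time setup).
  DBMS_STATS.CREATE_STAT_TABLE(ownname => 'APP_OWNER',
                               stattab => 'STATS_BASELINE');

  -- Save the current statistics so they can be restored later.
  DBMS_STATS.EXPORT_SCHEMA_STATS(ownname => 'APP_OWNER',
                                 stattab => 'STATS_BASELINE',
                                 statid  => 'BEFORE_TUNING');

  -- Re-gather statistics only for objects whose statistics are stale.
  DBMS_STATS.GATHER_SCHEMA_STATS(ownname => 'APP_OWNER',
                                 options => 'GATHER STALE');
END;
/
```

If plans get worse after the refresh, `DBMS_STATS.IMPORT_SCHEMA_STATS` with the same `stattab` and `statid` can put the saved statistics back, which is the point of taking the baseline first.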

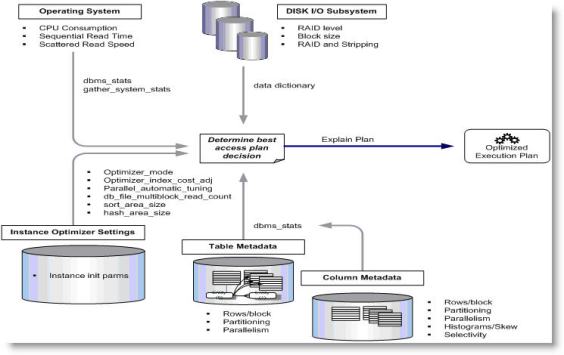

Your best friend in this case is the product’s Cost Based Optimizer model. The query optimizer is directly responsible for the performance of SQL statements. The ORACLE optimizer cost model is illustrated in the accompanying high-level diagram.

Note how sensitive the cost-based optimizer (CBO) cost model is: it accounts for I/O and CPU related data as well as table and index metadata. This is why it is important to tune the operating system, disk I/O, and network environment first and then gather and baseline ORACLE optimizer statistics. This cost model allows the optimizer to choose the best execution plans based on statistics of the underlying data. Decisions made by the CBO depend on the integrity of the statistics available at the time the statement is parsed and its execution plan is generated. In this scenario, a common cause of poor performance is bad or outdated statistics. Useful tools for monitoring database performance and activity include the Automatic Workload Repository (AWR) and the Automatic Database Diagnostic Monitor (ADDM). These tools can be used to pinpoint and isolate bad statistics that might be causing the optimizer to make bad decisions in preparing execution plans. Use the V$SYS_OPTIMIZER_ENV view to examine the current values of the optimizer-related settings in the database instance. See the following URL for more information about this view:

http://download.oracle.com/docs/cd/B28359_01/server.111/b28320/dynviews_3080.htm
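As a rough illustration, the two queries below show one way to inspect the optimizer environment and to look for missing or stale statistics. The schema name `APP_OWNER` is hypothetical, and the second query assumes access to the `DBA_TAB_STATISTICS` dictionary view.

```sql
-- Optimizer-related settings that differ from their defaults
-- at the instance level.
SELECT name, value
FROM   v$sys_optimizer_env
WHERE  isdefault = 'NO'
ORDER  BY name;

-- Tables in the hypothetical schema APP_OWNER whose statistics
-- are missing or flagged as stale.
SELECT table_name, last_analyzed, stale_stats
FROM   dba_tab_statistics
WHERE  owner = 'APP_OWNER'
AND    object_type = 'TABLE'
AND    (last_analyzed IS NULL OR stale_stats = 'YES')
ORDER  BY table_name;
```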

After understanding the common characteristics of the environment and application, it is time to begin carefully optimizing the application database instance (there is a lot to this as well, which I will discuss in a future post). Then, and only then, are you ready to perform what query optimization you can by tuning the SQL Access workload.
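Even when the application does not expose its SQL, the statements it generates can usually be observed in the shared pool. A minimal sketch, assuming the application parses its statements as a hypothetical schema `APP_OWNER`:

```sql
-- Top 10 statements currently in the shared pool by total elapsed time,
-- restricted to the hypothetical application schema APP_OWNER.
SELECT *
FROM  (SELECT sql_id,
              executions,
              ROUND(elapsed_time / 1000000, 1) AS elapsed_seconds,
              buffer_gets,
              SUBSTR(sql_text, 1, 80)          AS sql_text_start
       FROM   v$sql
       WHERE  parsing_schema_name = 'APP_OWNER'
       ORDER  BY elapsed_time DESC)
WHERE  ROWNUM <= 10;
```

A list like this gives the SQL Access workload something concrete to work from, for example when deciding which indexes are worth evaluating.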

To summarize, the recommended approach to performance optimization for ORACLE when the application product doesn’t provide access to the underlying query engine is to apply the following methods in this order.

– Tune Operating System, Disk I/O, and Network environment

– Gather and baseline ORACLE optimizer statistics

– Adjust cost based optimizer parameters and tune to meet site specific needs

– Optimize the Application database instance

– Perform Query Optimization and Tune the SQL Access workload

Performing these steps in this order will maximize the value of the optimization effort and mitigate the risk that a global parameter change in the database will adversely impact the application when you don’t have access to the underlying codebase.
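One cautious way to stage a global parameter change is sketched below. The parameter and its value are purely illustrative, and step 3 assumes you want the change to be lost at the next instance restart unless it is made permanent later.

```sql
-- 1. Record the current value so the change can be reversed.
SELECT name, value, isdefault
FROM   v$parameter
WHERE  name = 'optimizer_index_cost_adj';

-- 2. Trial the change in a single test session first.
--    The value 50 is illustrative only, not a recommendation.
ALTER SESSION SET optimizer_index_cost_adj = 50;

-- 3. If the trial looks good, apply it instance-wide in memory only,
--    so that a restart reverts to the stored value.
ALTER SYSTEM SET optimizer_index_cost_adj = 50 SCOPE = MEMORY;
```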